Building Your Virtual Girlfriend: A Closer Look at CandyAI

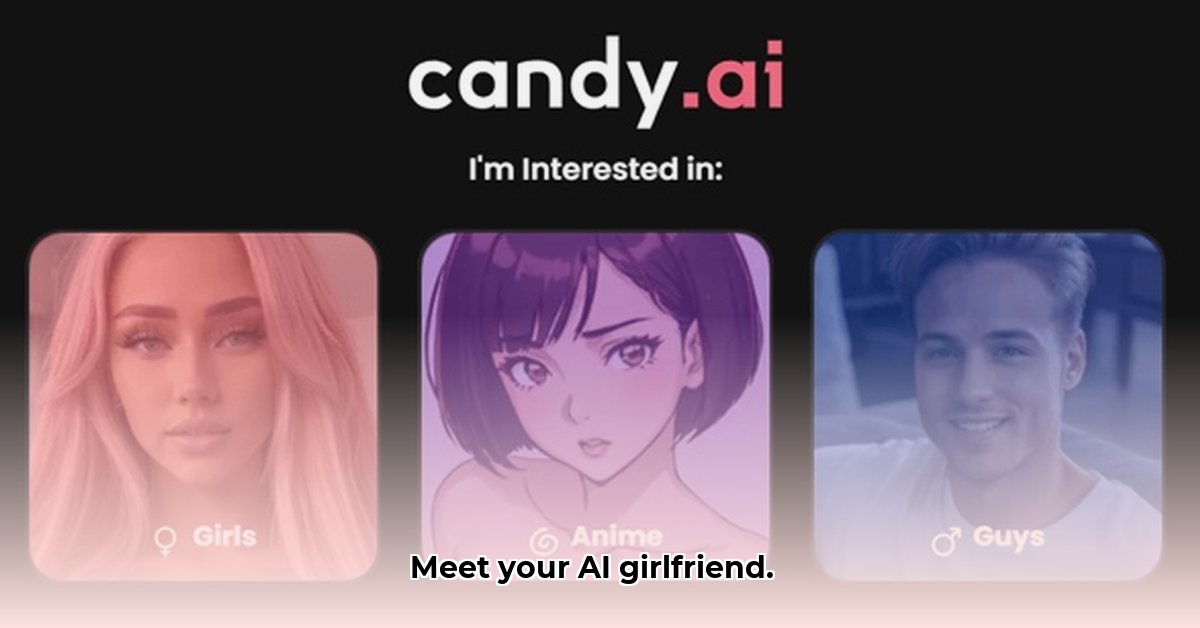

CandyAI lets users create a personalized AI girlfriend, promising a novel form of digital companionship. The technology also raises significant ethical and technological challenges that demand careful consideration. This article examines both CandyAI's appeal and its considerable risks.

Getting to Know Your AI Girlfriend: Features and Functionality

CandyAI lets users customize their AI girlfriend's personality and appearance and interact with her through conversations, AI-generated photos, and voice chats. The platform says its algorithms are designed to mimic natural human interaction and produce a realistic, responsive experience. However, "realism" is subjective, and the claim has not been independently verified.

Under the Hood: The Technology Behind the Experience

CandyAI's conversational capabilities rely on natural language processing (NLP) and machine learning (ML) models that adapt to each user's interactions, with the aim of building a personalized relationship that evolves over time. The user interface prioritizes simplicity, but the effectiveness of the platform's built-in security measures still needs independent assessment. Until then, how well CandyAI protects user data from breaches and misuse remains a key privacy concern.

Ethical Crossroads: Navigating the Complexities of NSFW Content

CandyAI's inclusion of uncensored NSFW content poses a significant ethical challenge and raises several critical questions. How can the platform keep users safe and prevent harm? Could access to such material contribute to addiction or other unhealthy behaviors? What measures will prevent misuse and protect vulnerable users? Mitigating these risks requires robust moderation and content filtering, and the platform must strike a careful balance between creative freedom and user protection.

Legal Landscape: Navigating the Regulatory Maze

Offering mature content places CandyAI in a complex legal and regulatory landscape. International and national laws on child safety, obscenity, data privacy, and consumer protection all apply. Complying with them is essential if CandyAI is to avoid legal repercussions, and the task is made harder by the fact that these laws keep evolving. The potential for users to become emotionally dependent on AI companions raises further ethical questions and underscores the need for regulatory frameworks that are proactive and adaptable.

A Balanced Perspective: Potential and Pitfalls

CandyAI represents a significant, if controversial, advance in AI companionship. Its appeal lies in the prospect of a constantly adaptable companion tailored to each user's needs and preferences. The ethical and regulatory challenges, however, are substantial and cannot be overlooked. CandyAI's long-term viability depends on addressing these concerns proactively, and transparency and accountability are crucial for building trust and ensuring responsible use. The future of AI companions remains uncertain, but ethical implications and user safety must stay at the center of it. Ongoing research into the psychological impact of AI companions is vital to understanding the consequences of this technology.

Key Risks and Mitigation Strategies

The following table summarizes key risks and potential mitigation strategies:

| Area | Risk Category | Probability | Impact | Mitigation |
|---|---|---|---|---|
| Uncensored NSFW Content | Ethical/Legal | High | High | Robust content filtering, strict legal compliance, independent audits of moderation systems. |
| AI Interactions | User Safety/Privacy | Medium | Medium | Comprehensive data encryption, transparent privacy policy, independent data security verification. |
| Data Security | Technical | Medium | High | Multi-factor authentication, regular independent security audits, robust incident response plan. |
| Algorithm Bias | Ethical/Reputational | Low | Medium | Proactive bias detection and mitigation, ongoing monitoring of user interactions. |

These probability and impact ratings are qualitative judgments that reflect current understanding and ongoing research. Because AI companionship is evolving rapidly, this risk analysis may need to be revised.

Mitigating Ethical Concerns: A Practical Approach

Because CandyAI can be misused, addressing its ethical risks requires a multi-faceted approach in which developers and users share responsibility for how the technology is built and used.

For Developers:

- Diversify AI Personalities: Avoid reinforcing gender stereotypes by creating diverse AI companions with varying backgrounds and characteristics.

- Robust Data Privacy: Implement strong encryption, transparent data handling policies, and user-controlled data access.

- Promote Responsible Use: Educate users on potential risks and benefits, encouraging real-world social interaction.

- Develop Ethical Guidelines: Establish internal standards addressing bias, consent, and potential harm.

For Users:

- Mindful Engagement: Treat AI interactions differently from real-life relationships, and avoid over-reliance.

- Critical Awareness: Recognize the limitations of AI; AI companions are not human beings.

- Data Protection: Be aware of data collection practices and review privacy policies carefully.

Balancing Innovation and Responsibility

CandyAI and similar platforms represent significant technological advances, but they must be developed responsibly. Their potential for misuse demands careful attention to ethical implications, and developers, regulators, and users need to work together to ensure that this technology serves humanity. This conversation must continue.



Last updated: Tuesday, May 20, 2025